Status: Draft

Scenario Description

Convolutional Neural Networks are commonly used for autonomous driving [LEC98]. A research team has shown that a complex architecture is capable of driving a car using an end-to-end concept [NVI16]. For this purpose, a paradigm called Imitation Learning was applied [HUS17]. Here, the model is trained in a supervised manner and mimics the demonstrated actions.
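The supervised character of Imitation Learning can be illustrated with a minimal sketch. It is not the end-to-end CNN from [NVI16]: a single linear unit stands in for the network, a scalar lateral offset stands in for the camera image, and `expert_steering` is a hypothetical demonstrator invented for this example.

```python
import random


def expert_steering(offset):
    """Hypothetical expert: steer proportionally back toward the track centre."""
    return -0.8 * offset


def train_behaviour_cloning(samples, epochs=500, lr=0.1):
    """Fit steering = w * offset + b to recorded (offset, steering) pairs
    by minimising the mean squared error with plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for offset, steering in samples:
            error = (w * offset + b) - steering
            grad_w += 2.0 * error * offset / n
            grad_b += 2.0 * error / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# "Record" demonstrations: observations labelled with the expert's action.
rng = random.Random(0)
offsets = [rng.uniform(-1.0, 1.0) for _ in range(100)]
recorded = [(x, expert_steering(x)) for x in offsets]

# The learned parameters should approach the expert's behaviour.
w, b = train_behaviour_cloning(recorded)
```

The labelled pairs play the role of the recorded driving data; no reward signal is involved at any point.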

Another applicable paradigm is Reinforcement Learning [SB98]. In Reinforcement Learning, training requires a reward system instead of labeled data. Using this approach, researchers have successfully trained models capable of solving various complex tasks [KOR13], including tasks with continuous action spaces [SIL14][LIL15].

Robotic / Autonomous Driving

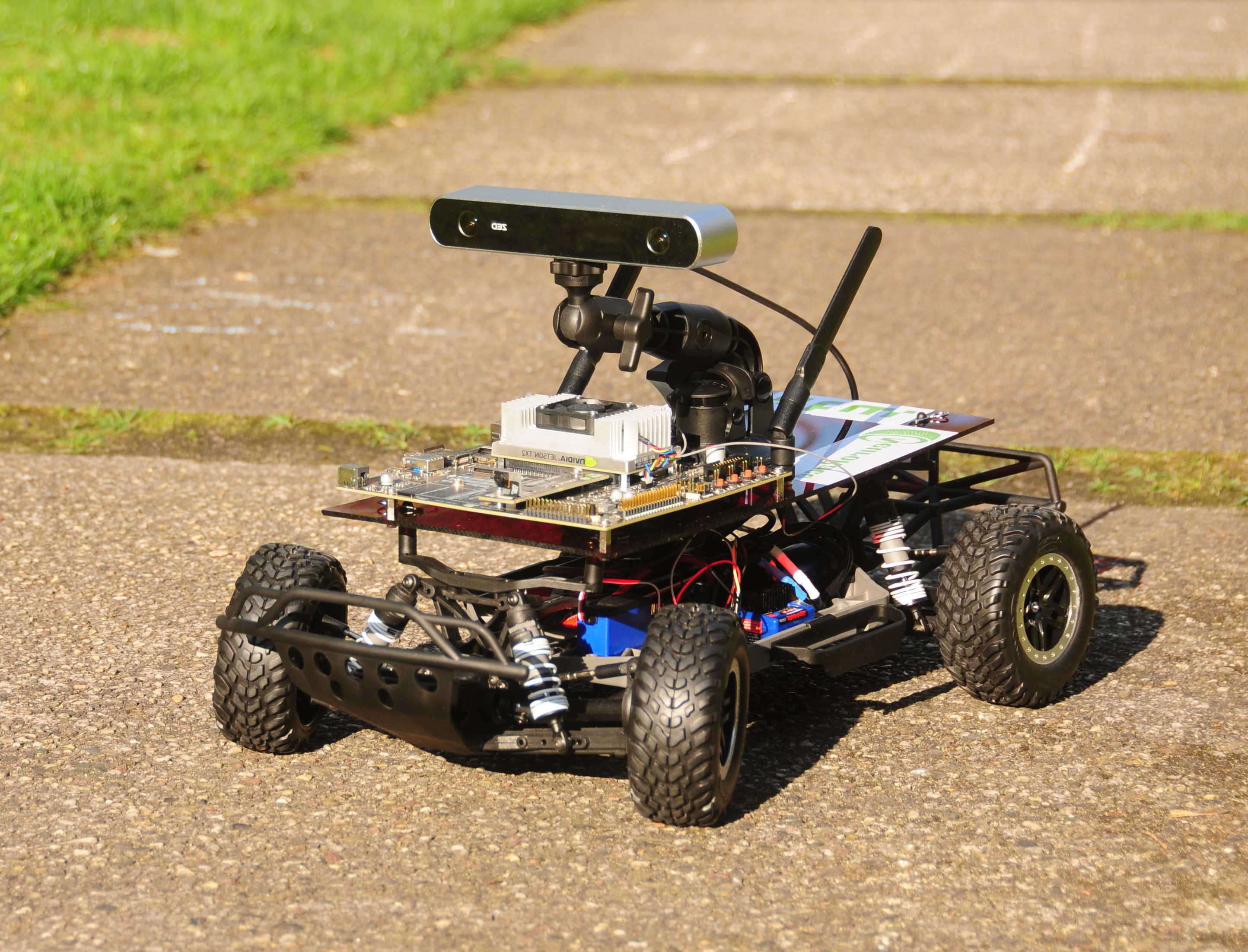

Based on the Supervised Learning approach, a real-world prototype racecar at 1:10 scale is built and equipped with a camera. Data can be recorded to train a model capable of driving autonomously on a random track bordered by pylons (also at 1:10 scale). Further sensors and different cameras can be fitted to the real-world prototype, either replacing or complementing the current camera. With the Reinforcement Learning paradigm in mind, a simulator [KH04] is used in combination with the Robot Operating System (ROS) [QUI09] and OpenAI Gym [BRO16], which makes it possible to train networks with different reward functions. The MIT-Racecar project serves as the basis for the simulated version of the real-world prototype. The implementation is split into standalone modules. This architecture makes it possible to train a model by Imitation Learning in the real world or in a simulated environment and afterwards to improve it further with Reinforcement Learning. The base system is Linux in combination with ROS.
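The idea of training against interchangeable reward functions can be sketched as follows. This is a toy stand-in, not the project's simulator or ROS integration: `ToyTrackEnv`, both reward functions, and all constants are hypothetical, and the class only imitates the classic Gym interface (`reset()` returning an observation, `step(action)` returning observation, reward, done flag, and info) without importing Gym.

```python
import math


def centering_reward(offset, speed, crashed):
    """Hypothetical reward: favour speed near the centre, punish hitting a pylon."""
    if crashed:
        return -10.0
    return speed * (1.0 - abs(offset))


def survival_reward(offset, speed, crashed):
    """Hypothetical alternative: constant reward for every step without a crash."""
    return -10.0 if crashed else 1.0


class ToyTrackEnv:
    """Toy lateral-offset model of a pylon-bordered track (assumed values)."""
    HALF_WIDTH = 1.0   # normalised distance from track centre to the pylons
    SPEED = 0.5        # constant forward speed
    DT = 0.1           # simulation time step
    MAX_STEPS = 200    # episode length limit

    def __init__(self, reward_fn):
        self.reward_fn = reward_fn  # the reward function is swappable
        self.offset, self.heading, self.steps = 0.0, 0.0, 0

    def reset(self):
        self.offset, self.heading, self.steps = 0.2, 0.0, 0
        return (self.offset, self.heading)

    def step(self, steering):
        steering = max(-1.0, min(1.0, steering))  # clip the continuous action
        self.heading += steering * self.DT
        self.offset += self.SPEED * math.sin(self.heading) * self.DT
        self.steps += 1
        crashed = abs(self.offset) >= self.HALF_WIDTH
        reward = self.reward_fn(self.offset, self.SPEED, crashed)
        done = crashed or self.steps >= self.MAX_STEPS
        return (self.offset, self.heading), reward, done, {"crashed": crashed}


def run_episode(env, policy):
    """Roll out one episode and return (total reward, crashed flag)."""
    obs = env.reset()
    total, done = 0.0, False
    while not done:
        obs, reward, done, info = env.step(policy(obs))
        total += reward
    return total, info["crashed"]


def centre_seeking_policy(obs):
    """Hand-written controller that steers back toward the track centre."""
    offset, heading = obs
    return -2.0 * offset - 2.0 * heading


total, crashed = run_episode(ToyTrackEnv(centering_reward), centre_seeking_policy)
```

Passing `survival_reward` instead of `centering_reward` changes the training signal without touching the environment, which is the kind of decoupling a modular design allows.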

For further details see